Deploying Kubernetes v1.22.3 on CentOS 8.3

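Overview[1]

What is Kubernetes?

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are widely available.

Kubernetes components

A Kubernetes cluster consists of the components that represent the control plane and a set of machines called nodes.

Kubernetes API

The Kubernetes API lets you query and manipulate the state of objects in Kubernetes. The core of the Kubernetes control plane is the API server and the HTTP API that it exposes. Users, the different parts of the cluster, and external components all communicate with one another through the API server.

Working with Kubernetes objects

Kubernetes objects are persistent entities in the Kubernetes system. Kubernetes uses these entities to represent the state of your cluster. Learn about the Kubernetes object model and how to work with these objects.

Architecture and preparation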

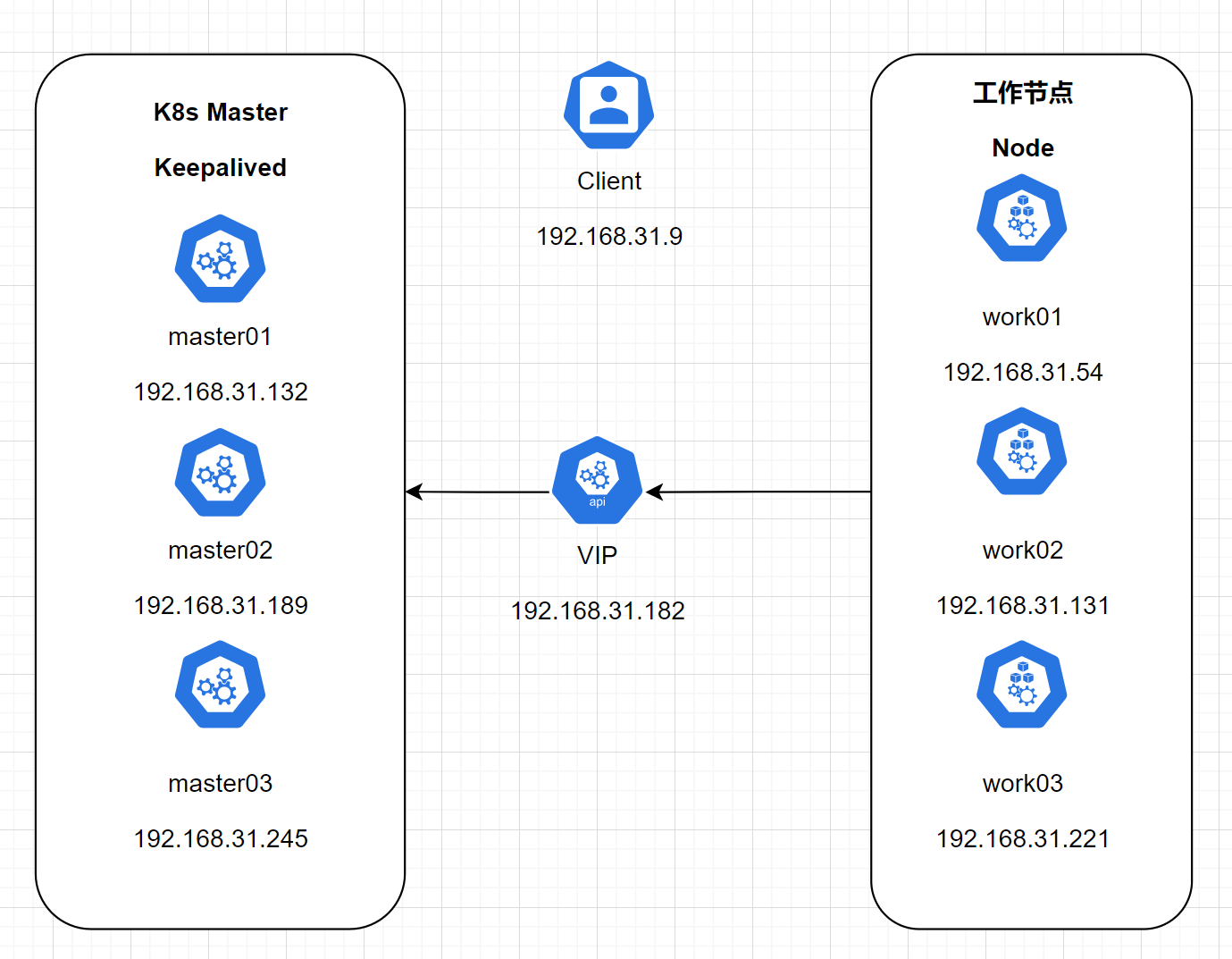

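Topology

This guide uses three servers as Kubernetes masters and uses Keepalived to make the masters highly available. The topology is as follows:

Details:

The three K8s master servers additionally run Keepalived for high availability; the worker nodes only need Docker and Kubernetes. The client machine is used only for pre-installation testing or as the Ansible host that distributes configuration files and commands, so it is optional in this topology, and installing Kubernetes and Docker on it is optional too.

Software versions: Docker 20.10.10, Kubernetes 1.22.3, Keepalived v2.1.5

All were the latest releases at the time of writing, so the yum install commands below do not pin version numbers.

Server specification: 4 cores, 4 GB RAM. Operating system: CentOS 8.3.2011 x86_64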

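- apiserver: made highly available through Keepalived; when a node fails, the Keepalived VIP moves to another node.
- controller-manager: Kubernetes elects a leader internally (controlled by the --leader-elect option, default true), so only one controller-manager instance in the cluster is active at any time.
- scheduler: Kubernetes elects a leader internally (controlled by the --leader-elect option, default true), so only one scheduler instance in the cluster is active at any time.
- etcd: kubeadm creates the etcd cluster automatically to provide high availability. Deploy an odd number of nodes; a 3-node cluster tolerates the loss of at most one machine.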

Quoted from [2].
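The "tolerates one failure" figure follows from etcd's majority quorum rule: a cluster of n members needs floor(n/2) + 1 of them alive. A minimal sketch of that arithmetic, with function names that are purely illustrative and not part of any tool:

```shell
#!/bin/bash
# Quorum for an etcd cluster of n members: a strict majority, floor(n/2) + 1.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while the cluster still has quorum.
etcd_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

Three and four members both tolerate a single failure, which is why an odd member count is the usual recommendation.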

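System preparation

Most of the preparation steps must be run on every server. It is recommended to open the compose pane in Xshell and send input to all sessions, or to use Ansible, to save time.

Disable the firewall

# Disable SELinux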

setenforce 0

# To make this permanent, edit the SELINUX line of the config file as follows

cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

# Set this to disabled. Be careful: a typo here can leave the system unable to boot

SELINUX=disabled

# SELINUXTYPE= can take one of these three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

# Stop and disable firewalld

systemctl stop firewalld

systemctl disable firewalld

# Flush the iptables rules

iptables -F

If you cannot disable the firewall, open the following ports[3]

Control plane nodes

| Protocol | Direction | Port range | Purpose | Used by |
|---|---|---|---|---|
| TCP | Inbound | 6443 | Kubernetes API server | All components |
| TCP | Inbound | 2379-2380 | etcd server client API | kube-apiserver, etcd |
| TCP | Inbound | 10250 | Kubelet API | kubelet itself, control plane components |
| TCP | Inbound | 10251 | kube-scheduler | kube-scheduler itself |
| TCP | Inbound | 10252 | kube-controller-manager | kube-controller-manager itself |

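Worker nodes

| Protocol | Direction | Port range | Purpose | Used by |
|---|---|---|---|---|
| TCP | Inbound | 10250 | Kubelet API | kubelet itself, control plane components |
| TCP | Inbound | 30000-32767 | NodePort Services (default range) | All components |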
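If firewalld has to stay enabled, the ports listed above could be opened with firewall-cmd. The sketch below only prints the commands so they can be reviewed first; the role names "control-plane" and "worker" and both function names are labels invented for this example.

```shell
#!/bin/bash
# Map a node role to the TCP ports listed in the tables above.
k8s_ports() {
  case "$1" in
    control-plane) echo "6443 2379-2380 10250 10251 10252" ;;
    worker)        echo "10250 30000-32767" ;;
    *) echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Print (not run) one firewall-cmd command per port, followed by a reload.
print_firewall_cmds() {
  local ports port
  ports=$(k8s_ports "$1") || return 1
  for port in $ports; do
    echo "firewall-cmd --permanent --add-port=${port}/tcp"
  done
  echo "firewall-cmd --reload"
}

print_firewall_cmds control-plane
```

After checking the output, it can be piped to bash as root on the matching nodes, for example `print_firewall_cmds worker | bash`.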

Set a static IP

# Edit the NIC configuration file, replacing ifcfg-ens192 with your own interface name. For example:

cat /etc/sysconfig/network-scripts/ifcfg-ens192

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none # changed to manual (static) configuration

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens192

UUID=44c927a6-3c74-41bd-b1bd-538dee847687

DEVICE=ens192

ONBOOT=yes

# Static address settings

IPADDR="192.168.31.132"

PREFIX="24"

GATEWAY="192.168.31.1"

DNS1="192.168.31.1"

Alternatively, bind the MAC address to an IP on the gateway. Either way, the server IP must not change.

Set and distribute hostnames

hostnamectl set-hostname master01

# Give each host a different hostname, and record the name-to-IP mapping in the following file.

cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.31.9 master-server # the client machine

192.168.31.132 master01

192.168.31.189 master02

192.168.31.245 master03

192.168.31.54 work01

192.168.31.131 work02

192.168.31.221 work03

# Distribute the hosts file with Ansible or scp

ansible k8s -m copy -a 'src=/etc/hosts dest=/etc/hosts'

Disable swap

swapoff -a

# After turning swap off temporarily, comment out the swap line in /etc/fstab so the swap partition is not mounted again after a reboot, as follows:

cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Thu Nov 11 06:50:54 2021

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

UUID=400cf56c-93c5-47bb-be5f-49eed4d7dfcd / xfs defaults 0 0

UUID=10ea0953-4559-488b-b4f1-d3930d41e1fd /boot xfs defaults 0 0

UUID=D453-6E64 /boot/efi vfat umask=0077,shortname=winnt 0 2

#UUID=9c58fb61-66c7-4694-b34d-579e27d41d28 none swap defaults 0 0
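Commenting out the swap line by hand on six servers is easy to get wrong, so the edit could also be scripted. This is a minimal sketch; the function name is made up for the example, and it assumes swap entries have "swap" as a whitespace-delimited field, as in the file above.

```shell
#!/bin/bash
# Comment out every active swap entry in an fstab-style file.
# The original file is kept next to it with a .bak suffix.
disable_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  # Skip lines that are already comments; prefix "#" to lines containing a swap field.
  sed -E -i.bak '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$fstab"
}

# Demonstration on a scratch copy instead of the real /etc/fstab.
tmp=$(mktemp)
printf '%s\n' 'UUID=aaaa / xfs defaults 0 0' 'UUID=bbbb none swap defaults 0 0' > "$tmp"
disable_swap_entries "$tmp"
cat "$tmp"
rm -f "$tmp" "$tmp.bak"
```

Running `disable_swap_entries` with no argument as root would edit /etc/fstab itself and leave /etc/fstab.bak behind.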

Kernel configuration

# Create the configuration file k8s.conf with the following contents

cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

# Check for the br_netfilter module

lsmod |grep br_netfilter

# If br_netfilter is not loaded, run the command below to load it; otherwise skip this step.

modprobe br_netfilter

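# Load br_netfilter persistently (the heredoc delimiter is quoted so that $file is written literally instead of being expanded while the file is created):

cat > /etc/rc.sysinit << 'EOF'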

#!/bin/bash

for file in /etc/sysconfig/modules/*.modules ; do

[ -x $file ] && $file

done

EOF

cat > /etc/sysconfig/modules/br_netfilter.modules << EOF

modprobe br_netfilter

EOF

chmod 755 /etc/sysconfig/modules/br_netfilter.modules

sysctl -p /etc/sysctl.d/k8s.conf
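On systemd-based releases such as CentOS 8, /etc/rc.sysinit may not be executed at boot, so the module might not come back after a restart. An alternative worth considering is a drop-in under /etc/modules-load.d, which systemd-modules-load reads at boot. The sketch below is an assumption-labelled variant of the steps above: the function name and its root-directory parameter are invented here so the result can be previewed in a scratch directory first.

```shell
#!/bin/bash
# Write the module-load and sysctl drop-in files under a given root directory.
# Pass "/" (as root) to write to the real system, or a scratch directory to preview.
write_k8s_kernel_config() {
  local root="${1%/}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Modules listed in /etc/modules-load.d/*.conf are loaded at boot.
  echo "br_netfilter" > "$root/etc/modules-load.d/br_netfilter.conf"
  cat > "$root/etc/sysctl.d/k8s.conf" << 'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Preview in a temporary directory.
preview=$(mktemp -d)
write_k8s_kernel_config "$preview"
find "$preview" -type f
```

After writing to the real system, `modprobe br_netfilter && sysctl --system` applies the settings without a reboot.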

yum repository configuration

# Back up the existing repo file

mv /etc/yum.repos.d/CentOS-Linux-BaseOS.repo /etc/yum.repos.d/CentOS-Linux-BaseOS.repo.bak

# Download the Aliyun repo file

wget -O /etc/yum.repos.d/CentOS-Linux-BaseOS.repo https://mirrors.aliyun.com/repo/Centos-8.repo

# Create the Kubernetes yum repository configuration with the following contents:

cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

# Refresh the cache

yum clean all

yum makecache

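Deployment

Docker

Install Docker on every server; it is optional only on the client machine.

The commands below are distributed with Ansible. If you use Xshell instead, paste the content inside the single quotes after -a into the compose bar.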

ansible k8s -m shell -a 'yum install -y yum-utils'

ansible k8s -m shell -a 'yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo'

ansible k8s -m shell -a 'yum install -y docker-ce docker-ce-cli containerd.io'

ansible k8s -m shell -a 'systemctl start docker'

ansible k8s -m shell -a 'systemctl enable docker'

ansible k8s -m shell -a 'docker -v'

# Output of docker -v

work01 | CHANGED | rc=0 >>

Docker version 20.10.10, build b485636

work02 | CHANGED | rc=0 >>

Docker version 20.10.10, build b485636

master03 | CHANGED | rc=0 >>

Docker version 20.10.10, build b485636

master01 | CHANGED | rc=0 >>

Docker version 20.10.10, build b485636

master02 | CHANGED | rc=0 >>

Docker version 20.10.10, build b485636

work03 | CHANGED | rc=0 >>

Docker version 20.10.10, build b485636

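# Edit daemon.json with the following contents. registry-mirrors enables the image accelerator and exec-opts switches the cgroup driver to systemd. JSON does not allow comments, so keep the file itself comment-free, and do not forget the comma after the first entry.

cat /etc/docker/daemon.json

{
  "registry-mirrors": ["https://ltdrddoq.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}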

ansible k8s -m shell -a 'systemctl restart docker'

See [4] for details on changing the cgroup driver.

Installing Keepalived

Only the three masters need this step.

ansible master -m shell -a 'yum install -y keepalived'

# Edit the configuration file as follows:

cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id master01 # ID: set to the hostname; must be unique.
}
vrrp_instance VI_1 {
    state MASTER # Role: MASTER on the primary, BACKUP on the other two
    interface ens192 # NIC name
    virtual_router_id 51
    priority 100 # Priority: higher wins; master01, master02 and master03 use 100, 90 and 80
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.31.182 # VIP, the same on every master.
    }
}

# Distribute the configuration file; router_id, state and priority must be adjusted on each host.

ansible master -m shell -a 'mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak'

ansible master -m copy -a 'src=/etc/keepalived/keepalived.conf dest=/etc/keepalived/keepalived.conf'

# Start keepalived and enable it at boot

ansible master -m shell -a 'service keepalived start'

ansible master -m shell -a 'systemctl enable keepalived'

# Test the VIP

ip addr | grep 182

inet 192.168.31.182/32 scope global ens192

# If 182 appears, this host currently holds the VIP
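Since only router_id, state and priority differ between the three masters, the per-host files could be rendered from one template rather than edited by hand. A minimal sketch follows; the function name and argument order are invented for illustration, and the interface name and VIP default to the values used in this guide.

```shell
#!/bin/bash
# Render a keepalived.conf for one master.
# Arguments: hostname, state (MASTER or BACKUP), priority, [interface], [VIP].
render_keepalived_conf() {
  local name="$1" state="$2" priority="$3"
  local iface="${4:-ens192}" vip="${5:-192.168.31.182}"
  cat << EOF
! Configuration File for keepalived
global_defs {
    router_id ${name}
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
}
EOF
}

# Write one file per master into a scratch directory for review.
out=$(mktemp -d)
render_keepalived_conf master01 MASTER 100 > "$out/keepalived-master01.conf"
render_keepalived_conf master02 BACKUP 90  > "$out/keepalived-master02.conf"
render_keepalived_conf master03 BACKUP 80  > "$out/keepalived-master03.conf"
ls "$out"
```

Each generated file can then be copied to /etc/keepalived/keepalived.conf on the matching host.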

Kubernetes

Install on all servers.

yum list kubelet --showduplicates | tail -10 # list the last 10 versions

Repository extras is listed more than once in the configuration

kubelet.x86_64 1.21.3-0 kubernetes

kubelet.x86_64 1.21.4-0 kubernetes

kubelet.x86_64 1.21.5-0 kubernetes

kubelet.x86_64 1.21.6-0 kubernetes

kubelet.x86_64 1.21.7-0 kubernetes

kubelet.x86_64 1.22.0-0 kubernetes

kubelet.x86_64 1.22.1-0 kubernetes

kubelet.x86_64 1.22.2-0 kubernetes

kubelet.x86_64 1.22.3-0 kubernetes

# This guide installs the latest version directly, so no version number is specified.

ansible k8s -m shell -a 'yum install -y kubelet kubeadm kubectl'

ansible k8s -m shell -a 'systemctl start kubelet'

ansible k8s -m shell -a 'systemctl enable kubelet'

# kubectl command completion

ansible k8s -m shell -a 'echo "source <(kubectl completion bash)" >> ~/.bash_profile'

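ansible k8s -m shell -a 'source ~/.bash_profile'

# Note: sourcing through Ansible only affects that one remote shell. Log in again, or run source ~/.bash_profile in your own session, for completion to take effect.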

# To install a specific version, use:

yum install kubelet-1.22.0 kubeadm-1.22.0 kubectl-1.22.0

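The three components

- kubectl: the command-line tool used to manage a Kubernetes cluster[5].
- kubeadm: the command used to bootstrap the cluster.
- kubelet: runs on every node in the cluster and starts Pods and containers.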

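kubeadm does not install or manage kubelet or kubectl for you, so you need to make sure they match the version of the control plane that kubeadm installs. Otherwise there is a risk of version skew, which can lead to unexpected errors and problems. A difference of one minor version between the control plane and the kubelet is supported, but the kubelet version may never exceed the API server version. For example, a kubelet at 1.7.0 is fully compatible with an API server at 1.8.0, but not the other way round. For information about installing kubectl, see the Install and Set Up kubectl documentation.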
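The skew rule in the paragraph above can be expressed as a small check. This is only a sketch under stated assumptions: both function names are invented here, versions are assumed to share the same major number, and the allowed gap defaults to the one minor version quoted in the text (it can be overridden with a third argument).

```shell
#!/bin/bash
# Extract the minor number from a version such as 1.22.3 or v1.22.3.
minor_of() {
  local v="${1#v}"
  v="${v#*.}"
  echo "${v%%.*}"
}

# Arguments: API server version, kubelet version, [max allowed minor skew].
# Succeeds when the kubelet is not newer than the API server and at most
# max_skew minor versions older.
kubelet_skew_ok() {
  local api kubelet max_skew="${3:-1}"
  api=$(minor_of "$1")
  kubelet=$(minor_of "$2")
  [ "$kubelet" -le "$api" ] && [ $(( api - kubelet )) -le "$max_skew" ]
}

kubelet_skew_ok 1.8.0 1.7.0 && echo "kubelet 1.7.0 with API server 1.8.0: supported"
kubelet_skew_ok 1.7.0 1.8.0 || echo "kubelet 1.8.0 with API server 1.7.0: not supported"
```

On a running node the two inputs could come from `kubelet --version` and `kubectl version`, trimmed down to the bare version strings.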

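Pre-pulling images

Every server needs this step.

Note: if an image fails to download, or its name or tag is wrong, the later master initialization and node joins will fail.

Kubernetes needs several images at initialization time. They are hosted by Google and cannot be reached directly from mainland China, so pull them from the Aliyun mirror first and then retag them.

# Create a script with the following contents: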

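cat image.sh

#!/bin/bash
# Pull the control-plane images from the Aliyun mirror and retag them under k8s.gcr.io.
url=registry.cn-hangzhou.aliyuncs.com/google_containers
version=v1.22.3
for image in $(kubeadm config images list --kubernetes-version=$version); do
    # Keep only the last path component, so k8s.gcr.io/coredns/coredns:v1.8.4 becomes coredns:v1.8.4.
    # This assumes the mirror publishes every image under that short name.
    imagename=${image##*/}
    docker pull "$url/$imagename"
    # Retag with the full original name so two-level paths such as coredns/coredns are preserved.
    docker tag "$url/$imagename" "$image"
    docker rmi -f "$url/$imagename"
done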

chmod +x image.sh

./image.sh

docker images

# Output: confirm that all images are present and correctly named:

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-apiserver v1.22.3 53224b502ea4 3 weeks ago 128MB

k8s.gcr.io/kube-scheduler v1.22.3 0aa9c7e31d30 3 weeks ago 52.7MB

k8s.gcr.io/kube-controller-manager v1.22.3 05c905cef780 3 weeks ago 122MB

k8s.gcr.io/kube-proxy v1.22.3 6120bd723dce 3 weeks ago 104MB

hello-world latest feb5d9fea6a5 8 weeks ago 13.3kB

k8s.gcr.io/etcd 3.5.0-0 004811815584 5 months ago 295MB

k8s.gcr.io/coredns/coredns v1.8.4 8d147537fb7d 5 months ago 47.6MB # Note: this image path has two levels; if the script tagged it incorrectly, retag it manually.

k8s.gcr.io/pause 3.5 ed210e3e4a5b 8 months ago 683kB

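Initializing the cluster with kubeadm

Run the initialization and the following steps on master01 only.

# The cluster configuration file for 1.22.3 is as follows: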

cat kubeadm-config-v3.yaml

apiVersion: kubeadm.k8s.io/v1beta3 # API version
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.31.132
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  imagePullPolicy: IfNotPresent
  name: master01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs: # List the hostname and IP of every Kubernetes node, plus the VIP
  - master01
  - master02
  - master03
  - work01
  - work02
  - work03
  - 192.168.31.132
  - 192.168.31.189
  - 192.168.31.245
  - 192.168.31.54
  - 192.168.31.131
  - 192.168.31.221
  - 192.168.31.182 # VIP address
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 192.168.31.182:6443
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: v1.22.3
networking:
  podSubnet: 10.244.0.0/16 # Pod network; must match the flannel network add-on installed below (this is the default)
  serviceSubnet: 10.96.0.0/12 # Service (cluster virtual IP) network, the entry point for access
scheduler: {}

# Start the initialization

kubeadm init --config=./kubeadm-config-v3.yaml --ignore-preflight-errors=all

# Remember to save the following output:

[init] Using Kubernetes version: v1.22.3

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master01 master02 master03 work01 work02 work03] and IPs [10.96.0.1 192.168.31.132 192.168.31.182 192.168.31.189 192.168.31.245 192.168.31.54 192.168.31.131 192.168.31.221]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master01] and IPs [192.168.31.132 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master01] and IPs [192.168.31.132 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 12.505519 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.22" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master01 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: gowjtv.8v7xhrdzd4hfe7v5

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

# Other control-plane nodes (masters) join with this command.

kubeadm join 192.168.31.182:6443 --token gowjtv.8v7xhrdzd4hfe7v5 \

--discovery-token-ca-cert-hash sha256:801187cb551e875435bd255ec4b9d1bc37605cd73ad728a50fa206caf6ce724b \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

# Worker nodes join with this command.

kubeadm join 192.168.31.182:6443 --token gowjtv.8v7xhrdzd4hfe7v5 \

--discovery-token-ca-cert-hash sha256:801187cb551e875435bd255ec4b9d1bc37605cd73ad728a50fa206caf6ce724b

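Troubleshooting

- If an error occurs partway through, run kubeadm reset and start again.
- Use kubeadm config print init-defaults[6] to print the default configuration.
- The configuration file above targets 1.22.3. To update an older configuration file, use the official migration tool: kubeadm config migrate --old-config kubeadm-config-v3.yaml[6]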

Load the environment variable

Run on master01 only.

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

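Install the flannel network add-on

Run on master01 only.

For network reasons flannel.yml cannot be downloaded directly, so save the following contents to a file instead.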

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: quay.io/coreos/flannel:v0.15.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.15.1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg

Save it as kube-flannel.yml

# Note the following section of the file: the default network is 10.244.0.0/16, which must match the podSubnet used for kubeadm init.

  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

# Install flannel

kubectl apply -f kube-flannel.yml
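Because a mismatch between the kubeadm podSubnet and the flannel Network only shows up later as broken Pod networking, a quick text comparison of the two files before running kubectl apply may help. The sketch below uses plain sed and string comparison; the three function names are invented for this example, and it assumes each value sits on a single line as in the files shown in this guide.

```shell
#!/bin/bash
# Print the podSubnet value from a kubeadm ClusterConfiguration file.
pod_subnet_of() {
  sed -nE 's/^[[:space:]]*podSubnet:[[:space:]]*([0-9.]+\/[0-9]+).*/\1/p' "$1" | head -n 1
}

# Print the "Network" value from the net-conf.json section of a flannel manifest.
flannel_network_of() {
  sed -nE 's/^[[:space:]]*"Network":[[:space:]]*"([^"]+)".*/\1/p' "$1" | head -n 1
}

# Succeeds only when both files name the same, non-empty CIDR string.
subnets_match() {
  local a b
  a=$(pod_subnet_of "$1")
  b=$(flannel_network_of "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Run the check only if both files from this guide are in the current directory.
if [ -f kubeadm-config-v3.yaml ] && [ -f kube-flannel.yml ]; then
  if subnets_match kubeadm-config-v3.yaml kube-flannel.yml; then
    echo "pod subnet matches flannel Network"
  else
    echo "MISMATCH: fix one of the two files before applying"
  fi
fi
```

The comparison is purely textual, so equivalent CIDRs written differently would be reported as a mismatch.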

Joining the other masters to the cluster

Distributing certificates

Run on master01.

Set up passwordless SSH login:

# Generate a key pair

ssh-keygen -t rsa -P ''

# Copy the public key to master02 and master03

ssh-copy-id -i ~/.ssh/id_rsa.pub root@master02

ssh-copy-id -i ~/.ssh/id_rsa.pub root@master03

Distribute the certificates:

# Save the following as a script and run it.

cat cert-main-master.sh

USER=root
CONTROL_PLANE_IPS="master02 master03"
for host in ${CONTROL_PLANE_IPS}; do
    # Create the target directories first; scp fails if pki/etcd does not exist on the remote host.
    ssh "${USER}"@$host "mkdir -p /etc/kubernetes/pki/etcd"
    scp /etc/kubernetes/pki/ca.crt "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/ca.key "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.key "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.pub "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.crt "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.key "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/etcd/ca.crt "${USER}"@$host:/etc/kubernetes/pki/etcd/ca.crt
    scp /etc/kubernetes/pki/etcd/ca.key "${USER}"@$host:/etc/kubernetes/pki/etcd/ca.key
done

chmod +x ./cert-main-master.sh

./cert-main-master.sh

Joining the cluster

Run on master02 and master03.

# Join using the token generated when master01 was initialized:

kubeadm join 192.168.31.182:6443 --token gowjtv.8v7xhrdzd4hfe7v5 \

--discovery-token-ca-cert-hash sha256:801187cb551e875435bd255ec4b9d1bc37605cd73ad728a50fa206caf6ce724b \

--control-plane

# Output:

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master01 master02 master03 work01 work02 work03] and IPs [10.96.0.1 192.168.31.189 192.168.31.182 192.168.31.132 192.168.31.245 192.168.31.54 192.168.31.131 192.168.31.221]

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master02] and IPs [192.168.31.189 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master02] and IPs [192.168.31.189 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

The 'update-status' phase is deprecated and will be removed in a future release. Currently it performs no operation

[mark-control-plane] Marking the node master02 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master02 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

Configure the environment variable

Run on master02 and master03.

scp master01:/etc/kubernetes/admin.conf /etc/kubernetes/

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

Joining the worker nodes to the cluster

Run on work01, work02 and work03.

# Join using the token generated when master01 was initialized:

kubeadm join 192.168.31.182:6443 --token gowjtv.8v7xhrdzd4hfe7v5 \

--discovery-token-ca-cert-hash sha256:801187cb551e875435bd255ec4b9d1bc37605cd73ad728a50fa206caf6ce724b

# Output omitted

Testing

Run on any master.

kubectl get nodes

# Check the output to confirm that every node is Ready

NAME STATUS ROLES AGE VERSION

master01 Ready control-plane,master 3d2h v1.22.3

master02 Ready control-plane,master 3d2h v1.22.3

master03 Ready control-plane,master 3d2h v1.22.3

work01 Ready <none> 3d2h v1.22.3

work02 Ready <none> 3d2h v1.22.3

work03 Ready <none> 3d2h v1.22.3
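With six nodes the listing above is easy to read by eye, but the same check could be scripted. The sketch below reads `kubectl get nodes --no-headers` output on standard input, so it can be tried without a cluster; the function name is invented for this example and the sample rows are altered copies of the output above.

```shell
#!/bin/bash
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Sample input in the same column layout as above (work03 altered for illustration).
not_ready_nodes << 'EOF'
master01   Ready      control-plane,master   3d2h   v1.22.3
work03     NotReady   <none>                 3d2h   v1.22.3
EOF
```

Against a live cluster the call would be `kubectl get nodes --no-headers | not_ready_nodes`; an empty result means every node reports Ready.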

kubectl get po -o wide -n kube-system

# Check that every component is running normally

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-78fcd69978-2zb6j 1/1 Running 1 (7h2m ago) 3d2h 10.244.0.5 master01 <none> <none>

coredns-78fcd69978-cpdxv 1/1 Running 1 (7h2m ago) 3d2h 10.244.0.4 master01 <none> <none>

etcd-master01 1/1 Running 3 (7h2m ago) 3d2h 192.168.31.132 master01 <none> <none>

etcd-master02 1/1 Running 1 3d2h 192.168.31.189 master02 <none> <none>

etcd-master03 1/1 Running 5 3d2h 192.168.31.245 master03 <none> <none>

kube-apiserver-master01 1/1 Running 3 (7h2m ago) 3d2h 192.168.31.132 master01 <none> <none>

kube-apiserver-master02 1/1 Running 6 3d2h 192.168.31.189 master02 <none> <none>

kube-apiserver-master03 1/1 Running 3 3d2h 192.168.31.245 master03 <none> <none>

kube-controller-manager-master01 1/1 Running 2 (7h2m ago) 3d2h 192.168.31.132 master01 <none> <none>

kube-controller-manager-master02 1/1 Running 0 3d2h 192.168.31.189 master02 <none> <none>

kube-controller-manager-master03 1/1 Running 0 3d2h 192.168.31.245 master03 <none> <none>

kube-flannel-ds-dgg82 1/1 Running 0 3d2h 192.168.31.221 work03 <none> <none>

kube-flannel-ds-gfddz 1/1 Running 1 (3d2h ago) 3d2h 192.168.31.189 master02 <none> <none>

kube-flannel-ds-gqgxl 1/1 Running 0 3d2h 192.168.31.54 work01 <none> <none>

kube-flannel-ds-lftmj 1/1 Running 0 3d2h 192.168.31.131 work02 <none> <none>

kube-flannel-ds-px7wl 1/1 Running 0 3d2h 192.168.31.245 master03 <none> <none>

kube-flannel-ds-tdfhl 1/1 Running 1 (7h2m ago) 3d2h 192.168.31.132 master01 <none> <none>

kube-proxy-gl66g 1/1 Running 1 (7h2m ago) 3d2h 192.168.31.132 master01 <none> <none>

kube-proxy-hgvls 1/1 Running 0 3d2h 192.168.31.245 master03 <none> <none>

kube-proxy-hlshp 1/1 Running 0 3d2h 192.168.31.54 work01 <none> <none>

kube-proxy-mvfv4 1/1 Running 0 3d2h 192.168.31.221 work03 <none> <none>

kube-proxy-nds5n 1/1 Running 0 3d2h 192.168.31.189 master02 <none> <none>

kube-proxy-tjjbq 1/1 Running 0 3d2h 192.168.31.131 work02 <none> <none>

kube-scheduler-master01 1/1 Running 5 (7h2m ago) 3d2h 192.168.31.132 master01 <none> <none>

kube-scheduler-master02 1/1 Running 2 3d2h 192.168.31.189 master02 <none> <none>

kube-scheduler-master03 1/1 Running 1 3d2h 192.168.31.245 master03 <none> <none>

Setting up the Dashboard

Run on master01.

Official site[7]

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

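# By default the Dashboard is reachable only from inside the cluster; change the Service to type NodePort and expose it externally on port 30001

# Add the nodePort: 30001 and type: NodePort lines shown below (lines 43 and 46 of the edited file)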

vi recommended.yaml

...

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort

...

kubectl apply -f recommended.yaml

kubectl get pods -n kubernetes-dashboard

# Output:

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-c45b7869d-27tj6 1/1 Running 0 3d1h

kubernetes-dashboard-576cb95f94-ppfqn 1/1 Running 0 3d1h

# Create an administrator account

kubectl create serviceaccount dashboard-admin -n kube-system

# Output:

serviceaccount/dashboard-admin created

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

# Output:

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

# Output:

Name: dashboard-admin-token-4qxbs

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: bd869664-73bd-43c7-934f-6f8d33075c3e

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1099 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InBpalB3U0VvQVBjeFFFbWdpMVVnNlVRclRFOUpNT2lscHFUcFl2TTNicXMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tNHF4YnMiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYmQ4Njk2NjQtNzNiZC00M2M3LTkzNGYtNmY4ZDMzMDc1YzNlIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.y7VfhIb-0JOAAHsew0OBMzDr4acQ6AkSpQKcpfdXH4QXr43Gkelfi413Xg3tPP68GLqA1tMhYTFKeb3U0zJekJuIHyv68_NWK2hGcq3ooh93_gFgQhK-JiRF9FtLvvGgB8bCHyf7Zs8zdMhs76uRG608ryQechKeKJjh-twk2Idbmx3AURD5BIePjaGofk03A4IRI-m_PUSKLxG0dupwyXoHEsv9Hpc6_A7hMG5209vtF0VlDIKlcD91QCk8tpb-OLuDYjA3jf5xP9qnwTXg72uObe5g0kQPcHC2T7pLKyL8rKg4UdhamPKEC05Nyr_aHirJMDPZ0tEE_g7tt-MzFQ

# Save the token

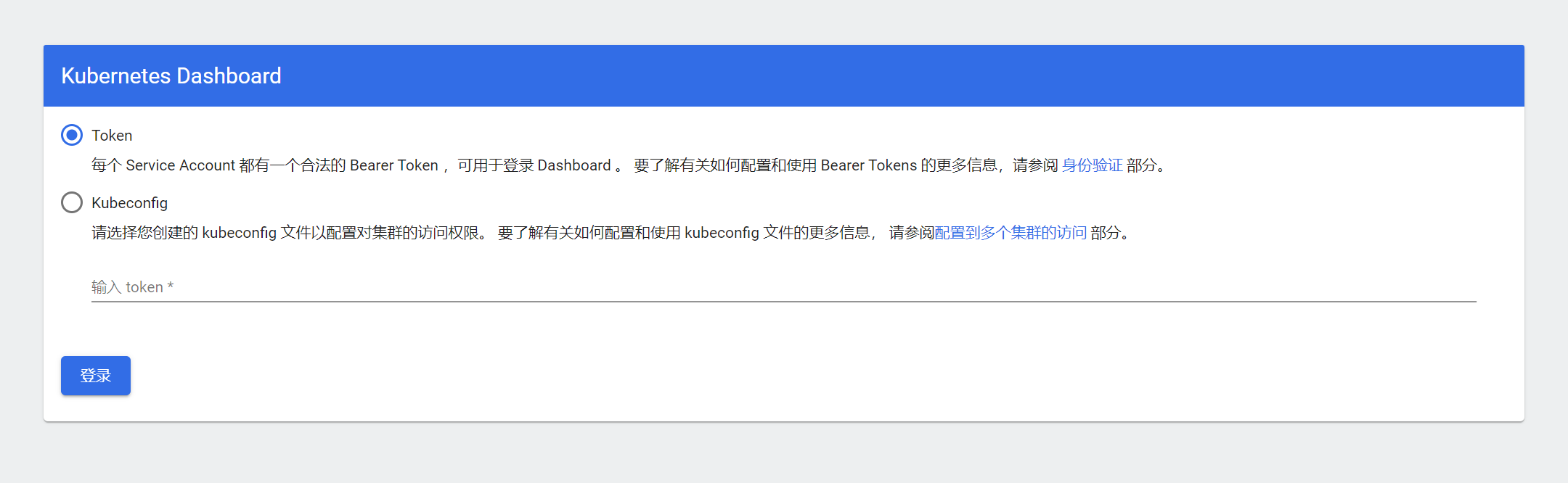

Open https://VIP:30001 in a browser.

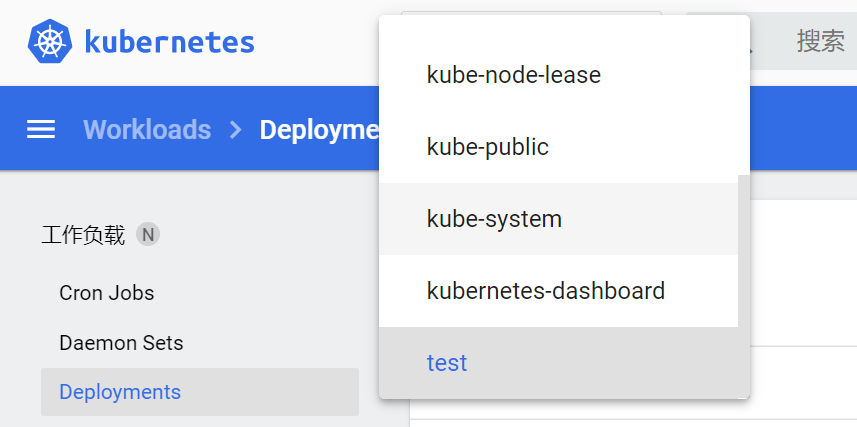

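Enter the token, log in, and select all namespaces. If the page looks like the screenshot, everything is working.

Testing high availability

- Shut down master01 or disconnect its network to simulate a master01 failure.

- Log in to master02 and check that the VIP has moved to it.

- Log in to the Dashboard. It shows one failed host, but the console remains usable.

- Bring master01 back up. Because master01 has the highest priority, the VIP should return to it; check that it has.

- Log in to the Dashboard and check that everything works normally.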

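Author

This document is only a set of notes taken during installation, intended for my own reference; use your own judgment before applying it in production. Please credit the source when reposting.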

Centos8.3部署k8sv1.22.3.md - good good study,day day up~

References:

kubeadm快速搭建K8s集群 基于v1.22.2版本 - 努力吧阿团 - 博客园